Experimentation has long been a go-to method for improving conversions across brand sites, e-commerce platforms, and mobile apps. But in our work with clients and partners, we continue to see the same core challenge: many teams are stuck running surface-level A/B tests that don’t move the needle.

Many teams stop at simple tests, such as comparing version A and version B of a page. As a result, they often get inconclusive results with no significant difference between the two versions, or they only solve “simple” problems: which CTA text works better, which banner converts more, or which button color users find more appealing.

In this article, we share three proven tips from our Conversion Rate Optimization specialists that consistently lead to more impactful and scalable experimentation results.

Why experimentation still matters

Experimentation remains a powerful driver of growth, helping businesses move faster, reduce risk, and improve outcomes at every stage.

- Increased conversions: A well-structured experimentation strategy delivers measurable results. One Niteco client achieved a 95% increase in sign-ups by using personalized offers tailored to user behavior. Instead of guessing what would work, the team tested variations and scaled the one that delivered real impact.

- Faster decision-making: Running controlled tests removes guesswork, allowing teams to make data-backed decisions quickly and confidently.

- Reduced risk: Instead of rolling out large changes blindly, businesses can test new ideas on smaller segments to validate impact before scaling.

- Continuous improvement: Experimentation fosters a culture of ongoing optimization, where every user interaction is an opportunity to learn and improve.

- Deeper user insights: Tests reveal how real users behave, uncovering patterns and preferences that traditional analytics may miss.

3 core pillars of experimentation

Successful experimentation isn’t about testing button colors. It’s about building systems that are scalable, trustworthy, and insight-driven. These three pillars form the foundation of any high-performing experimentation program.

1. Use a goal tree and build an experimentation methodology

Many of our clients have made the mistake of jumping straight into experimentation without first establishing an optimization methodology and process. Experimentation is not just about what to test and whether a test succeeds after running for a couple of weeks. First, ask your team: Why are you running experiments? What are the end goals? What metrics will you measure along the way for each test?

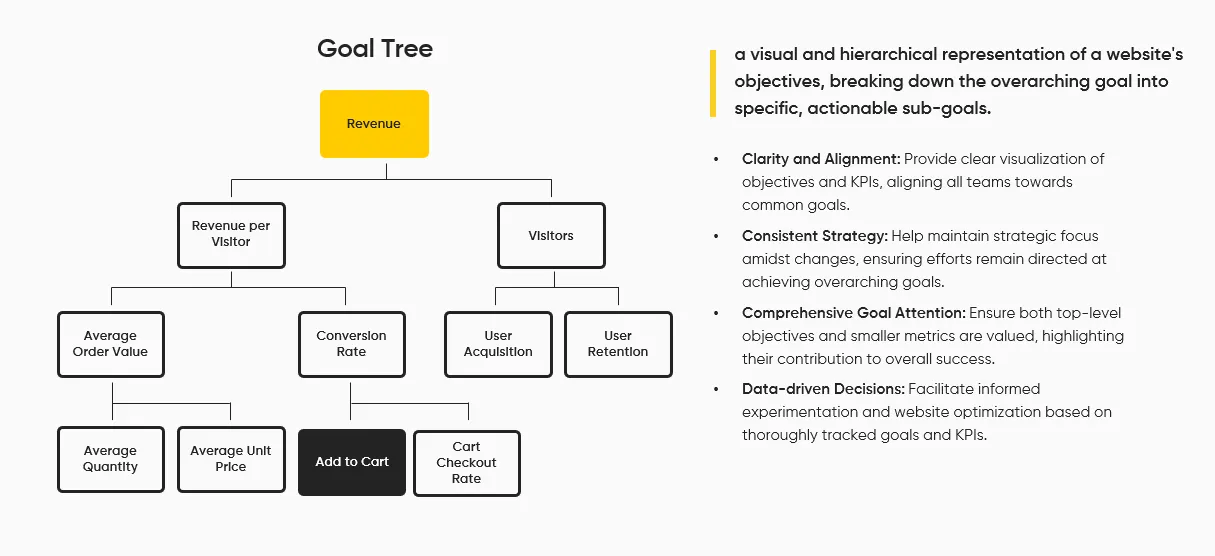

To answer these questions, build a goal tree to align your experiments with business objectives. A goal tree breaks down your top-line business goals into measurable outcomes and optimization metrics. For a deeper look at how a structured experimentation mindset drives long-term business results, see our article on why more experimentation is essential for modern businesses.

Identify your high-level company goals, the business drivers that influence those goals, and the optimization metrics (specific KPIs) for your tests. This helps your team focus resources on the right problems and prioritize work by impact. Top-level company goals rarely move right away, but your tests can target the “micro-conversions” that add up to them. Here’s an example of a Niteco goal tree:

Source: Optimizely
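To make the structure concrete, here is a minimal Python sketch of a goal tree as a simple tree of nodes. The goals, drivers, and metrics in it are hypothetical placeholders, not the Niteco example above, and `GoalNode`, `metrics_for`, and `path_to` are illustrative names rather than part of any tool. Walking from a test metric up to the root shows which company goal a given test ultimately supports.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class GoalNode:
    """A node in a goal tree: a company goal, a business driver, or an optimization metric."""
    name: str
    level: str
    children: list[GoalNode] = field(default_factory=list)

    def add(self, child: GoalNode) -> GoalNode:
        """Attach a child node and return it so further levels can be added to it."""
        self.children.append(child)
        return child


def metrics_for(node: GoalNode) -> list[str]:
    """Collect the leaf-level optimization metrics that roll up to `node`."""
    if not node.children:
        return [node.name]
    metrics: list[str] = []
    for child in node.children:
        metrics.extend(metrics_for(child))
    return metrics


def path_to(node: GoalNode, target: str) -> list[str] | None:
    """Return the chain of node names from `node` down to `target`, or None if absent."""
    if node.name == target:
        return [node.name]
    for child in node.children:
        sub_path = path_to(child, target)
        if sub_path is not None:
            return [node.name] + sub_path
    return None


# Hypothetical goal tree for an online store.
root = GoalNode("Increase online revenue", "company goal")
orders = root.add(GoalNode("More completed orders", "business driver"))
order_value = root.add(GoalNode("Higher average order value", "business driver"))
orders.add(GoalNode("Add-to-cart rate", "optimization metric"))
orders.add(GoalNode("Checkout completion rate", "optimization metric"))
order_value.add(GoalNode("Cross-sell click-through rate", "optimization metric"))

print(metrics_for(root))
# ['Add-to-cart rate', 'Checkout completion rate', 'Cross-sell click-through rate']
print(" -> ".join(path_to(root, "Checkout completion rate")))
# Increase online revenue -> More completed orders -> Checkout completion rate
```

Each test then targets one leaf metric, and the path back to the root documents why that test matters to the business.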

We also follow Optimizely’s advised experimentation cycle, which looks something like this:

Source: Optimizely

- Experimentation should actually start with “Implement”: What tool are you using to set up your tests? How can you build repeatable foundations for all your future experiments? Does your experimentation tool work well with your web or app platform? If a test wins, how feasible is it to implement the change, and how can you minimize the effort needed to roll it out?

- “Ideate” is when you build out ideas for experimentation. Use the data you already have, such as current analytics, customer feedback, problems identified in previous research, or customer issues backed by data, to formulate a data-driven hypothesis.

- “Plan” your tests a quarter ahead, or further if possible. Take into account the development roadmaps and digital marketing campaigns that will run at the same time as your experiments, so that they don’t distort your results.

- “Build & QA” your experiments carefully so that each variation receives its intended share of traffic and any factors that could skew the results are eliminated.

- “Analyze” your test results. This is one of the most difficult steps. For tests that appear successful, evaluate carefully whether the result is statistically significant and fair, and whether other factors, such as seasonality, could invalidate it. It’s even more important to analyze inconclusive tests, ask why they were inconclusive, and come up with a follow-up action plan.

- “Iterate” means learning from test results and using those insights to inform the next experiment. It is an ideal next step but not always relevant to your experimentation cycle. If the test is for a one-time promotion campaign or a feature release that will only happen once, you can probably skip this step.
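The “Plan” step depends on knowing roughly how much traffic a test needs before it can give a reliable answer. A common way to estimate this is the normal-approximation sample-size formula for comparing two conversion rates, sketched below in Python. The baseline rate, minimum detectable lift, 5% significance level, 80% power, and daily traffic figure are illustrative assumptions, and your experimentation platform may use a different statistical method, so treat the output as a planning estimate only.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(baseline_rate: float, relative_lift: float,
                              alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed per variation to detect `relative_lift` over `baseline_rate`.

    Uses the standard two-sided, two-proportion normal approximation.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(per_variation: int, variations: int, daily_visitors: int) -> int:
    """Days needed if all daily visitors are split evenly across the variations."""
    return ceil(per_variation * variations / daily_visitors)


# Illustrative numbers: 5% baseline conversion, aiming to detect a 10% relative lift.
n = sample_size_per_variation(0.05, 0.10)
print(n)                         # roughly 31,000 visitors per variation
print(days_to_run(n, 2, 5_000))  # about two weeks for an A/B test at 5,000 visitors/day
```

Because the required sample grows with the inverse square of the difference you want to detect, halving the detectable lift roughly quadruples the traffic needed. This is one reason small cosmetic tests on lower-traffic pages so often end up inconclusive.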

2. Do more than just A/B tests

A/B testing remains the most widely used experimentation method: it’s simple to run and quick to deliver insights. But relying solely on A/B tests can limit your optimization potential. Businesses today need a broader set of testing methods to answer more complex questions and unlock deeper insights.

- A/B/n Testing: Compare multiple variations of a page or feature at once, not just two. This helps you evaluate more ideas in parallel and identify the highest-performing version faster.

- Multivariate Testing (MVT): Test multiple elements on a page (such as headlines, images, and CTAs) and understand not only how each element performs individually, but also how the elements interact.

- Multi-Page Testing: Measure how changes across a user journey, such as from product page to checkout, impact conversions holistically, rather than one screen at a time.

- Personalization Testing: Segment your audience and serve tailored experiences based on behavior, location, device type, or traffic source. This type of testing often produces larger lifts in KPIs, but it requires more planning, targeting logic, and advanced tooling. Explore this further in our guide to how personalization fuels eCommerce growth, including real examples and impact on conversion rates.
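Multivariate tests grow quickly because every combination of element variants becomes its own experience. The Python sketch below enumerates a full-factorial design with `itertools.product`; the page elements, their variants, and the traffic figure are hypothetical. Full factorial is assumed here, although some tools also support designs that test only a subset of combinations.

```python
from itertools import product

# Hypothetical page elements and the variants to test for each.
elements = {
    "headline": ["Current headline", "Benefit-led headline"],
    "hero_image": ["Lifestyle photo", "Product close-up"],
    "cta_text": ["Buy now", "Add to cart", "See pricing"],
}


def full_factorial(options: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return every combination of variants, one dict per page experience."""
    names = list(options)
    return [dict(zip(names, combo)) for combo in product(*options.values())]


combinations = full_factorial(elements)
print(len(combinations))  # 12 (2 x 2 x 3)
print(combinations[0])
# {'headline': 'Current headline', 'hero_image': 'Lifestyle photo', 'cta_text': 'Buy now'}

# With 60,000 visitors split evenly, each combination sees only a fraction of them.
monthly_visitors = 60_000
print(monthly_visitors // len(combinations))  # 5000
```

Three elements with two, two, and three variants already produce 12 experiences, and each needs enough visitors on its own to reach significance. That is why MVT generally suits high-traffic pages, while A/B/n testing is easier to power on smaller ones.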

Personalized experiments and multi-step tests used to be too complex or time-consuming for most teams. But with platforms like Optimizely Feature Experimentation, many of these barriers are now lower. The updated platform supports server-side and client-side testing, streamlined variant targeting, and robust traffic management features. If you’re interested in this, talk to our experts today.
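Traffic management in any experimentation tool rests on two requirements: each visitor should see the same variation on every visit, and the overall split should match the intended allocation. A common way to achieve both is deterministic hash-based bucketing, sketched below in Python. This illustrates the general technique only and is not Optimizely’s actual implementation; `assign_variation`, the experiment key, and the weights are hypothetical.

```python
import hashlib
from collections import Counter


def assign_variation(user_id: str, experiment_key: str,
                     allocation: dict[str, float]) -> str:
    """Deterministically map a user to a variation according to traffic weights.

    `allocation` maps variation names to traffic shares that must sum to 1.0.
    """
    if not allocation or abs(sum(allocation.values()) - 1.0) > 1e-9:
        raise ValueError("allocation must be non-empty and sum to 1.0")
    # Hash the experiment key together with the user ID so the same user can
    # land in different buckets in different experiments.
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # evenly spread value in [0, 1)
    cumulative = 0.0
    for variation, share in allocation.items():
        cumulative += share
        if bucket < cumulative:
            return variation
    return variation  # guard against floating-point rounding on the last share


allocation = {"control": 0.5, "personalized_offer": 0.5}
print(assign_variation("user-42", "homepage-offer-test", allocation))

# Across many visitors, the split should come out close to 50/50.
counts = Counter(assign_variation(f"user-{i}", "homepage-offer-test", allocation)
                 for i in range(10_000))
print(counts)
```

Because assignment depends only on the user ID and experiment key, no per-user state needs to be stored. Changing the weights while a test is running moves some users between variations, though, so it is safer to fix the allocation before launch.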


3. Communicate your experimentation results to more people in your organization

Your experimentation program can affect everyone in your organization, from the development team to the product, customer service, and marketing teams. That’s why it’s often worth sharing your program’s plans and results with the entire organization. Doing so helps build an “experimentation culture” in which decisions are tested and backed by data. It also brings in more insights and test ideas from relevant stakeholders. When you present your experimentation results to other groups, make sure you include the following:

- Why did you run the experiment? What was your hypothesis? What inspired the hypothesis?

- What experiment did you run? How many variations did you have? How were they different from one another?

- When did you run the experiment? For how long? How many visitors were allocated to each variation?

- What was the result? Was the result statistically significant? How did you determine the winning variation? Why do you think it won? For inconclusive tests, what do you think the reasons were?

- Lastly, share with them next steps, action plans, and your upcoming experimentation roadmaps.
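To answer “Was the result statistically significant?” for a conversion metric, a common starting point is a two-proportion z-test. The Python sketch below assumes a fixed-horizon test with two variations, checked once after reaching the planned sample size; the visitor and conversion counts are made up. Experimentation platforms may use other methods (for example, sequential statistics that allow continuous monitoring), and tests with more than two variations need a correction for multiple comparisons, so use this to understand the reasoning rather than to replace your tool’s analysis.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> dict[str, float]:
    """Two-sided z-test comparing the conversion rates of variation A and variation B."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate assuming no real difference
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "relative_lift": (rate_b - rate_a) / rate_a,
        "z": z,
        "p_value": p_value,
    }


# Made-up results: control converted 500 of 10,000 visitors, the variation 580 of 10,000.
result = two_proportion_z_test(500, 10_000, 580, 10_000)
print(f"lift {result['relative_lift']:.1%}, z = {result['z']:.2f}, p = {result['p_value']:.3f}")
significant = result["p_value"] < 0.05  # 5% significance level, chosen before the test
print("statistically significant" if significant else "inconclusive")
```

A p-value below the chosen threshold says the difference is unlikely to be due to chance alone. It does not rule out seasonality or overlapping campaigns, so report those checks alongside the statistic.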

Tell a story and be specific when presenting results. Be open to second opinions, feedback, and new ideas. Finally, be consistent: don’t share once and then keep every later test within your experimentation team.

Conclusion

Experimentation is an essential part of achieving digital success, and it’s no surprise that Optimizely is betting on more experimentation capabilities on its platform. Running experiments has become easier than ever, making it accessible – and important – for any modern digital business that wants to base its business practices on facts and numbers rather than hunches.

Most important for any business embarking on an experimentation strategy is to create an experimentation culture that permeates every level of the organization. Awareness of the importance of experimentation and an understanding of how it works are crucial for staying the course, even when results are disappointing.

With the three tips above in mind and Optimizely’s tools at your disposal, your experiments are far more likely to succeed and to make a meaningful difference to your bottom line. If you need more advice on which experiments to run for your business or how to implement your strategy, contact us today.

FAQs

What is the difference between A/B testing and multivariate testing?

A/B testing compares two variations of a single element, while multivariate testing evaluates multiple elements and how they interact, helping you understand the combined impact on conversions.

What is a goal tree?

A goal tree is a framework that connects high-level business goals to measurable optimization metrics, helping you align every test with strategic outcomes.

What are the benefits of personalization testing?

Personalization testing allows you to target different user segments with tailored experiences, often resulting in higher engagement and conversion rates.